The BitNetMCU framework simplifies the process of training accurate neural networks for deployment on low-end microcontrollers, such as the 15-cent CH32V003.

While major releases of new generative artificial intelligence (AI) tools, like large language models and text-to-image generators, tend to grab all the headlines, smaller-scale AI tools are quietly transforming a number of industries. These powerful yet compact AI applications, known as TinyML, are already being used to keep manufacturing equipment in optimal condition, monitor the environment, and track people’s health.

New TinyML applications are continually being enabled by advances in hardware that shrink size, cost, and energy consumption. Algorithmic improvements, meanwhile, allow more powerful models to run with fewer resources. Because most models are trained primarily with accuracy in mind, much of this work focuses on reducing model size without a significant drop in accuracy.

A new framework called BitNetMCU seeks to streamline the process of training and shrinking neural networks so they can run on even the lowest-end microcontrollers. The initial focus of the project is to support low-end RISC-V microcontrollers like the CH32V003, which has only 2 kilobytes of RAM and 16 kilobytes of flash memory. Moreover, the chip’s instruction set lacks a multiply instruction, an operation that is central to the matrix multiplications at the heart of neural network computations. However, the CH32V003 is extremely inexpensive, priced at about 15 cents in small quantities, making it an attractive option for large, distributed networks of sensors.
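To get a feel for what 16 kilobytes of flash means for a neural network, the following is a rough back-of-the-envelope sketch rather than anything taken from BitNetMCU itself. It assumes 1 kilobyte is 1,024 bytes and, unrealistically, that the whole flash is available for weights; in practice the program code also has to fit, so the real figures are lower. The function name `max_weights` is made up for this illustration.

```python
# Rough upper bound on how many weights fit in the CH32V003's flash.
# Assumption: 1 KB = 1024 bytes, and nothing else (code, tables) uses flash.
FLASH_BYTES = 16 * 1024


def max_weights(flash_bytes: int, bits_per_weight: int) -> int:
    """Return the most weights that could be stored at the given bit width."""
    return (flash_bytes * 8) // bits_per_weight


for bits in (32, 8, 4, 2, 1):
    print(f"{bits:>2}-bit weights: at most {max_weights(FLASH_BYTES, bits)}")
```

Going from 32-bit floating-point weights to 4-bit weights raises the ceiling from 4,096 to 32,768 parameters, which is why low-bit quantization matters so much on a chip this small.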

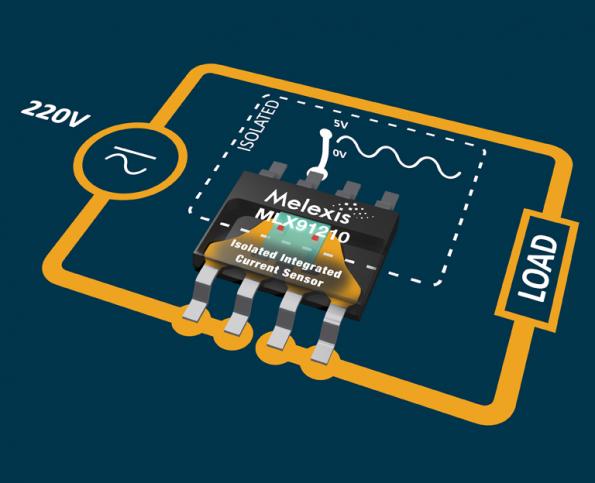

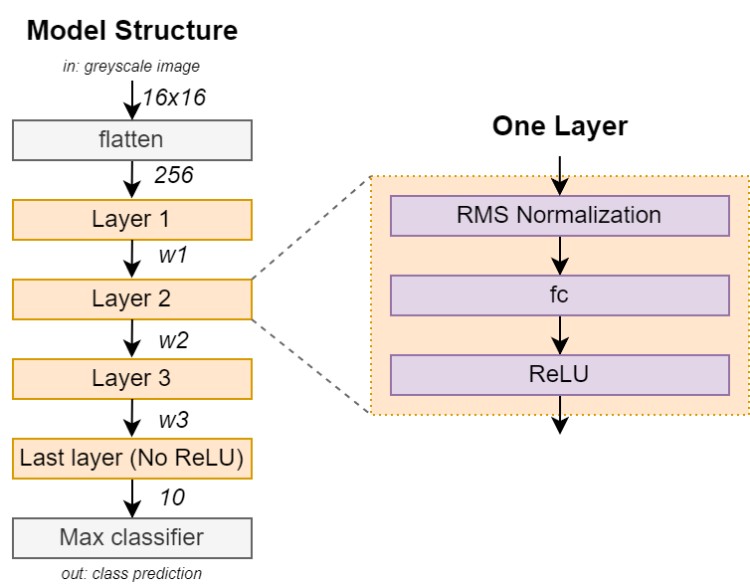

The BitNetMCU pipeline consists of a series of Python scripts that use PyTorch on the backend to train a neural network, followed by low-bit quantization to reduce the model size so it can fit on a tiny microcontroller. After training, additional scripts are available for testing the model’s performance. Once everything is optimized, another utility assists in deploying the model to a CH32V003 microcontroller. Although the project initially focuses on this specific microcontroller, BitNetMCU’s inference engine is implemented in ANSI C, which should make it portable to a wide range of hardware platforms.
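The article does not spell out which quantization scheme BitNetMCU uses, so the snippet below should be read only as a generic illustration of the idea behind low-bit quantization. It is a minimal sketch of symmetric quantization in plain Python: floating-point weights are mapped to small signed integers that share one scale factor. The function names and the example weights are made up for this illustration.

```python
def quantize_symmetric(weights, bits):
    """Map floats to signed integers in [-qmax, qmax] sharing a single scale.

    Generic symmetric quantization, not BitNetMCU's actual scheme.
    """
    if bits < 2:
        raise ValueError("this sketch needs at least 2 bits (sign + magnitude)")
    qmax = 2 ** (bits - 1) - 1                 # e.g. 7 for 4-bit weights
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    quantized = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer codes."""
    return [q * scale for q in quantized]


weights = [0.6, -1.0, 0.25, 0.0]               # made-up example values
codes, scale = quantize_symmetric(weights, bits=4)
print("integer codes:", codes)
print("reconstructed:", [round(w, 3) for w in dequantize(codes, scale)])
```

Only the integer codes and the scale need to be stored on the device. Each reconstructed weight differs from the original by at most half a quantization step, and that error is the price paid for the smaller model.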

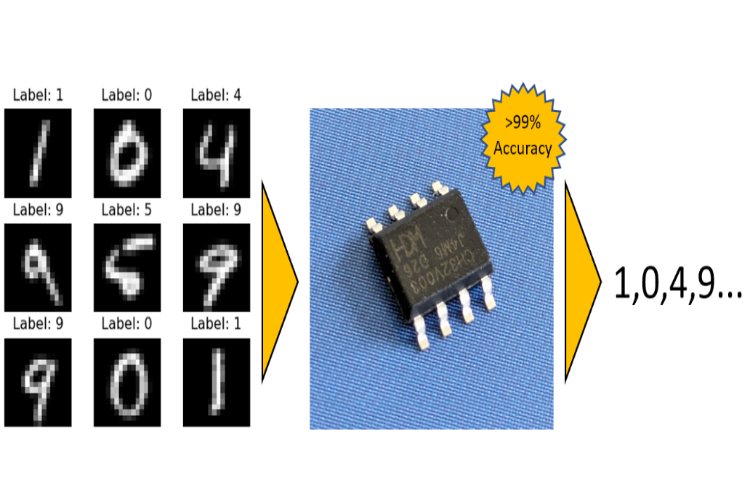

To test the system, an experiment was conducted to see how well a handwritten digit recognition model would perform on the 16×16 MNIST dataset. Thanks to the heavy quantization applied before deployment, the model fit within the limited RAM and flash memory of the CH32V003. The chip’s lack of a multiply instruction was worked around by replacing each multiplication with a series of additions, an approach facilitated by the BitNetMCU framework. Testing showed that the model achieved an accuracy of better than 99 percent.
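One common way to multiply without a multiply instruction is the shift-and-add method, sketched below in Python for readability. This illustrates the general technique and is not a transcription of BitNetMCU's C inference code; the function names are made up. For each set bit in the weight, a shifted copy of the activation is added to the result, so a low-bit weight needs only a handful of additions.

```python
def shift_add_multiply(activation: int, weight: int) -> int:
    """Multiply two integers using only shifts, additions, and sign handling."""
    negative = (activation < 0) != (weight < 0)
    a, w = abs(activation), abs(weight)
    result = 0
    while w:
        if w & 1:          # this bit of the weight is set: add the shifted activation
            result += a
        a <<= 1            # move on to the next power of two
        w >>= 1
    return -result if negative else result


def dot(activations, weights):
    """Dot product of two integer vectors built on shift_add_multiply."""
    acc = 0
    for a, w in zip(activations, weights):
        acc += shift_add_multiply(a, w)
    return acc


print(dot([3, -2, 5], [7, -1, 0]))  # 3*7 + (-2)*(-1) + 5*0 = 23
```

With a weight magnitude that fits in 3 bits, the loop runs at most three times per weight, which keeps the cost of each multiply-accumulate small even on a core without hardware multiplication.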

This high level of performance demonstrates the potential of BitNetMCU to make advanced AI accessible even on the most constrained hardware. The framework not only enables the deployment of sophisticated models on low-cost microcontrollers but also ensures that these models run efficiently and accurately.

The broader implications of BitNetMCU are significant. By making it easier to deploy powerful AI models on inexpensive and widely available microcontrollers, BitNetMCU opens up new possibilities for embedding intelligence in a multitude of devices. This can lead to smarter, more responsive technology in everything from industrial sensors to consumer electronics, enhancing functionality while keeping costs low.

BitNetMCU’s approach of leveraging existing AI frameworks like PyTorch and focusing on quantization techniques aligns well with the needs of developers working with constrained devices. The availability of comprehensive scripts and tools for training, testing, and deployment simplifies the workflow, allowing developers to focus on innovation rather than the intricacies of hardware limitations.

As the framework continues to evolve, it is expected to support an even wider range of microcontrollers and AI models, further democratizing the use of TinyML. With BitNetMCU, the promise of bringing AI to every corner of our world moves closer to reality, enabling smarter and more efficient technology across diverse applications.

Source code for the project is available on GitHub, along with some fairly good documentation. So, if you have a few dimes burning a hole in your pocket, BitNetMCU could be a fun way to get started in the world of TinyML.