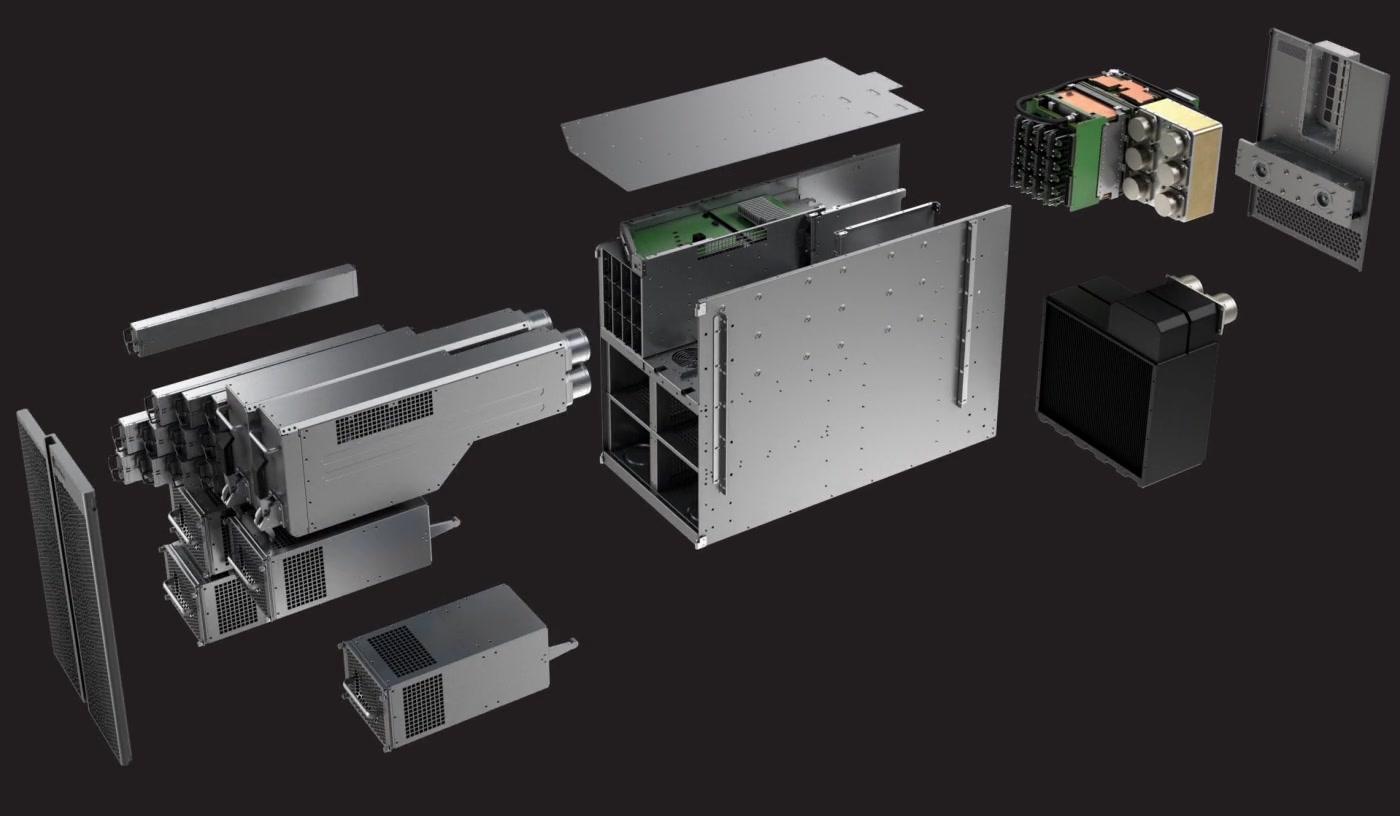

Cerebras Systems recently announced a milestone in Artificial Intelligence (AI) model training: the ability to train multi-billion-parameter AI models on a single CS-2 system.

Cerebras Systems says it can train complex Natural Language Processing (NLP) models with up to 20 billion parameters on a single CS-2 system. According to the company, this reduces the system engineering time needed to train large AI models from months to minutes.

In NLP, models trained on larger amounts of data generally perform more accurately than those trained on smaller datasets. Conventionally, training such models relies on a batch-processing approach in which the workload is split across a number of smaller processing units. This reduces the load on any single piece of hardware and can shorten training time, but partitioning and coordinating the work takes engineering effort. The new record set by Cerebras Systems removes that step: multi-billion-parameter AI models can be trained on a single CS-2 system.

According to Andrew Feldman, CEO and co-founder of Cerebras Systems, the company is

“Proud to democratize access to GPT-3 1.3B, GPT-J 6B, GPT-3 13B, and GPT-NeoX 20B, enabling the entire AI ecosystem to set up large models in minutes and train them on a single CS-2.”

This is made possible by combining the size and computational resources of the Cerebras Wafer Scale Engine-2 (WSE-2) with the Weight Streaming software architecture enhancements available in release R1.4 of the Cerebras Software Platform, CSoft. The Cerebras WSE-2 is one of the largest processors ever built. Its computational resources enable the training of huge AI models without splitting the work across multiple GPUs.
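To see why models of this size are normally spread across several GPUs, a rough memory estimate helps. The sketch below is illustrative arithmetic, not a Cerebras figure. It assumes 2 bytes per parameter for 16-bit weights, about 16 bytes per parameter of training state for mixed-precision training with the Adam optimizer (a common rule of thumb), and a hypothetical 80 GB accelerator for comparison. Parameter counts are the nominal ones from the model names, and activation memory is ignored.

```python
import math

# Nominal parameter counts taken from the model names quoted above.
MODELS = {
    "GPT-3 1.3B": 1.3e9,
    "GPT-J 6B": 6e9,
    "GPT-3 13B": 13e9,
    "GPT-NeoX 20B": 20e9,
}

# Assumptions (not from the article):
BYTES_FP16_WEIGHTS = 2     # 16-bit weights only
BYTES_TRAINING_STATE = 16  # fp16 weights + fp16 grads + fp32 master weights + two fp32 Adam moments
DEVICE_MEMORY_GB = 80      # hypothetical accelerator memory, for comparison only


def memory_gb(num_params, bytes_per_param):
    """Memory in gigabytes (10**9 bytes) for a given per-parameter cost."""
    return num_params * bytes_per_param / 1e9


def min_devices(num_params,
                bytes_per_param=BYTES_TRAINING_STATE,
                device_gb=DEVICE_MEMORY_GB):
    """Lower bound on devices needed just to hold the training state."""
    return math.ceil(memory_gb(num_params, bytes_per_param) / device_gb)


if __name__ == "__main__":
    for name, n in MODELS.items():
        weights = memory_gb(n, BYTES_FP16_WEIGHTS)
        state = memory_gb(n, BYTES_TRAINING_STATE)
        print(f"{name}: weights ~{weights:.1f} GB, "
              f"training state ~{state:.1f} GB, "
              f"at least {min_devices(n)} device(s)")
```

Under these assumptions, the 20-billion-parameter model needs about 40 GB for its weights alone and about 320 GB of training state, which is at least four 80 GB devices before activations are counted. Spreading a model across devices in this way is the partitioning work that a single large system avoids.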

Training Multi-Billion Parameter AI Models on a Single CS-2 System in Minutes

With the Cerebras WSE-2, multi-billion-parameter NLP models can be trained on a single CS-2 system. The WSE-2, together with the Weight Streaming software architecture, enables the training and evaluation of huge AI models without load distribution or batch processing across multiple GPUs. According to Cerebras, it also reduces the system engineering required from months to minutes. The company presents the WSE-2 as a major advance in AI model training and evaluation.

You can learn more about the product on the Cerebras Systems website.