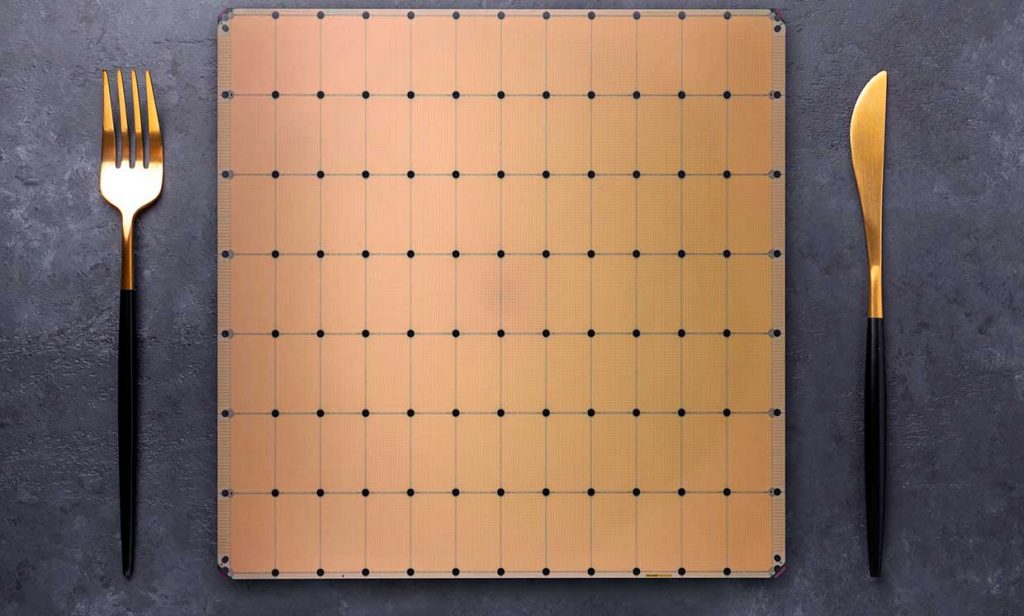

Cererbras’ All NEW Wafer Scale Engine Packs 2.6 Trillion Transistors For Deep Learning Workloads

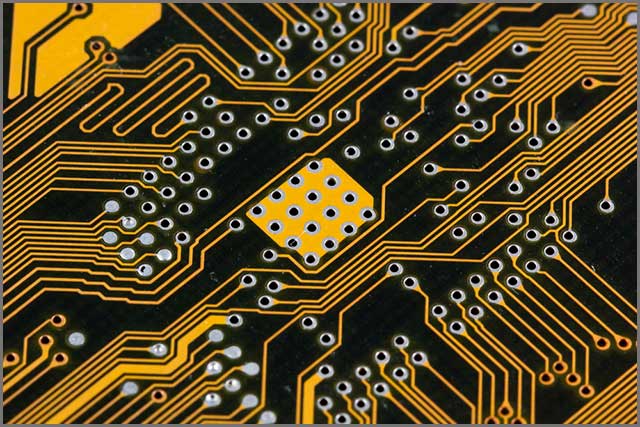

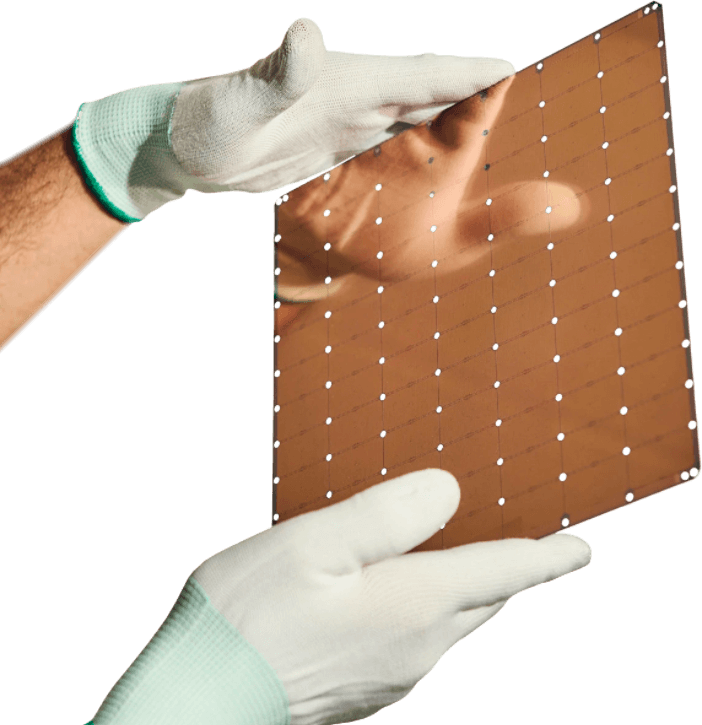

In 2019, we saw one of the largest single computer chips manufactured by a California-based AI startup, Cerebras, that unveiled the Wafer-Scale Engine for deep learning applications. The 1.2 trillion transistors-packed Wafer-Scale Engine came with 18GB of on-chip SRAM and an interconnect speed of 100 Pb/s (Petabytes per Second). After two years of development, the manufacturer has launched the next generation WSE-2 at the Linley Spring Processor Conference 2021.

To meet the computational requirements of deep learning tasks, the all-new Wafer Scale Engine comes in the same size as its predecessor of 46,255 mm2 but features more capabilities than ever before. The Cerebras Wafer Scale Engine 2 packs 2.6 trillion transistors with more than 800,000 cores, making it the most powerful single computer chip ever made that is entirely optimized for deep learning workloads.

“In AI compute, big chips are king, as they process information more quickly, producing answers in less time—and time is the enemy of progress in AI,”

Dhiraj Malik, vice president of hardware engineering, said in a statement.

With the increase in training time for deep learning models that are distributed over thousands of GPUs makes it more complex for deployment. This one device takes care of everything, “making orders-of-magnitude faster training and lower-latency inference easy to use and simple to deploy.” The Wafer Scale Engine adds 40 GB on-chip SRAM and an interconnect speed of 220 Pb/s, which is more than double the predecessor keeping the exact size of the wafer.

Like the first-generation Wafer Scale Engine, the AI-cores are easy to program with a robust Cerebras software platform integrating with widely used machine learning frameworks, including TensorFlow and PyTorch. For more details on the new high-performance Wafer Scale Engine, it is available on the product page, but no information on the pricing yet, so we expect it to be costly.