Smartphones and mobile devices have become deeply embedded in our lives and make many things much easier than before. As a result, owning a smartphone or other mobile device has become a necessity for almost everyone. Unfortunately, people with limited use of their arms still face a big challenge in using and benefiting from these devices.

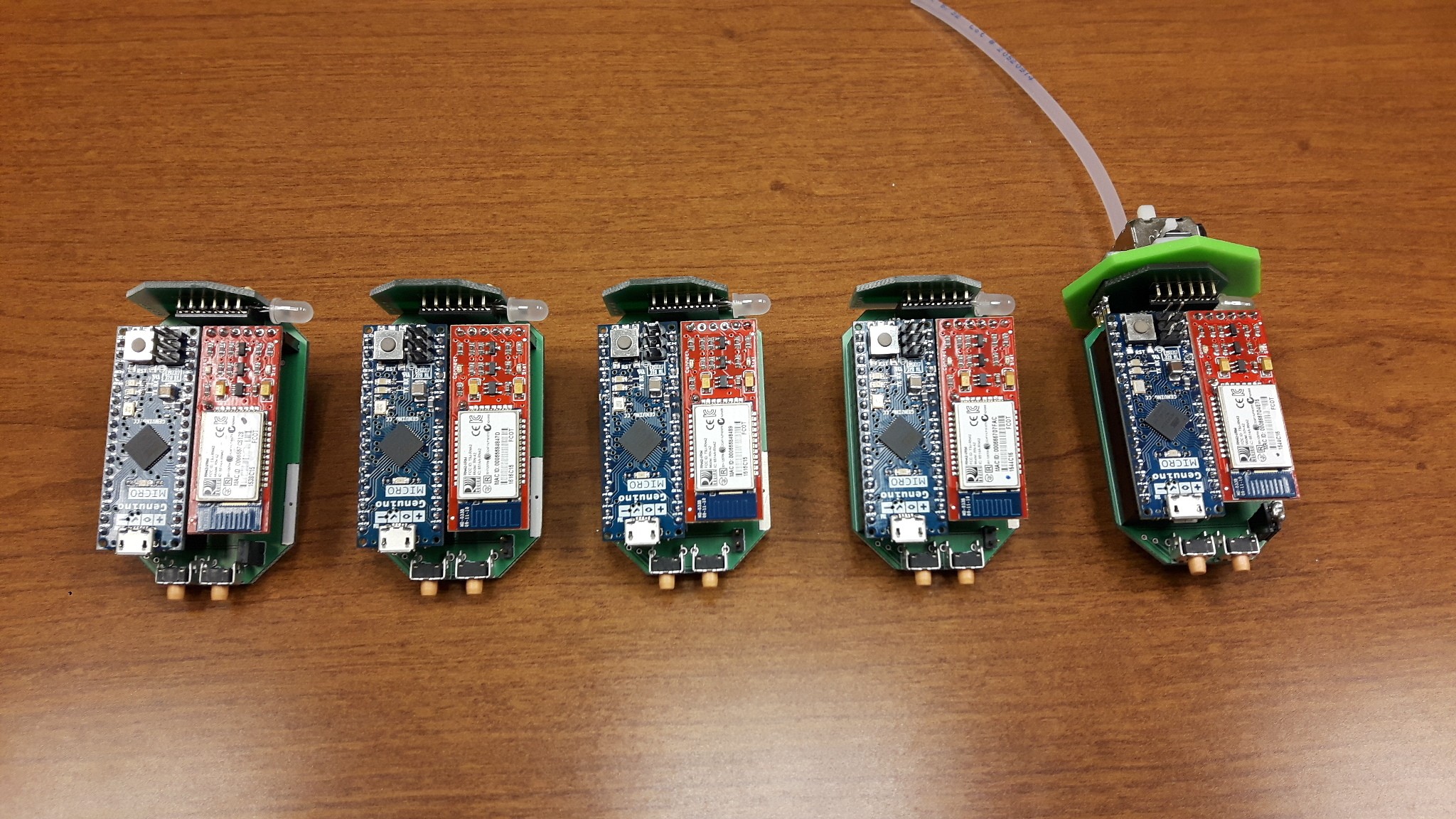

A group of developers set out to help these people and improve their access to smartphones with “LipSync”, an Arduino-based assistive device that lets users operate touchscreen devices through a mouth-operated joystick with sip-and-puff controls.

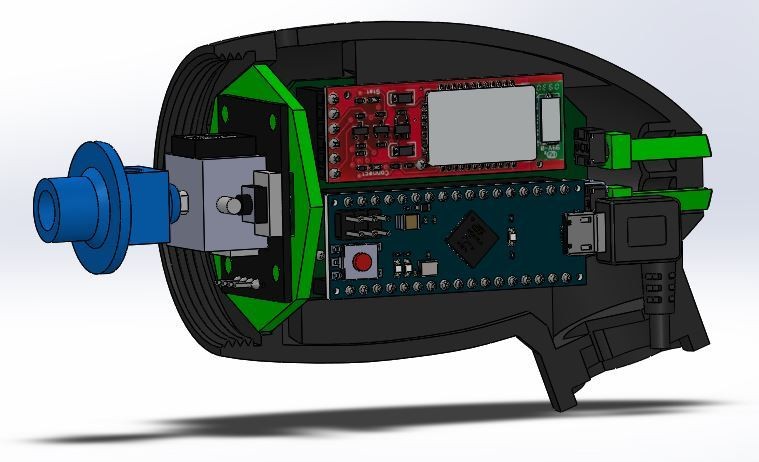

As the team explains on the project page, they focused on making the device robust and easy to build, designing a housing that can be 3D printed, and making it adaptable to a variety of wheelchairs.

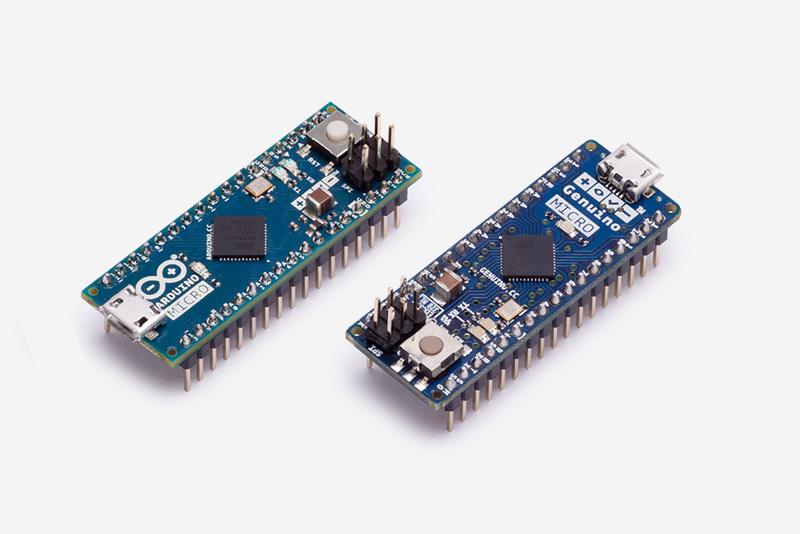

LipSync is based on the Arduino Micro, a microcontroller board built around the ATmega32U4, and is equipped with a Bluetooth module that connects to the smartphone and sends it the appropriate instructions.

Two main sensors are used in this project: an analog 2-axis thumb joystick, which moves a cursor on the device screen, and a pressure sensor, which detects sips and puffs and translates them into a “tap” and a press of the back button, respectively.
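To make the two-sensor design more concrete, here is a minimal sketch of the kind of logic such firmware might contain: turning a joystick axis reading into a cursor step, and classifying a pressure reading as a sip or a puff. It is written in Python for readability and is not the LipSync code (which had not been published). The only fact it relies on is that Arduino's `analogRead()` returns values from 0 to 1023; the center value, dead zone, maximum step, pressure threshold, and the assumption that the pressure reading rises with pressure are all hypothetical.

```python
# Illustrative sketch only, not the LipSync firmware.
# All constants except ADC_MAX are hypothetical choices.

ADC_MAX = 1023           # Arduino analogRead() returns 0..1023 (10-bit ADC)
ADC_CENTER = 512         # assumed resting value of each joystick axis
DEAD_ZONE = 30           # hypothetical: ignore small deflections near center
MAX_STEP = 10            # hypothetical: largest cursor step (pixels) per update
PRESSURE_THRESHOLD = 50  # hypothetical: raw-count change counted as sip or puff


def axis_to_step(raw):
    """Map one joystick axis reading (0..1023) to a signed cursor step."""
    offset = raw - ADC_CENTER
    if abs(offset) <= DEAD_ZONE:
        return 0  # joystick is near center: do not move the cursor
    # Scale the deflection beyond the dead zone linearly up to MAX_STEP,
    # clamping so both directions reach the same maximum.
    span = ADC_MAX - ADC_CENTER - DEAD_ZONE
    magnitude = min(abs(offset) - DEAD_ZONE, span)
    step = round(magnitude * MAX_STEP / span)
    return step if offset > 0 else -step


def classify_pressure(raw, baseline):
    """Return "tap" for a sip, "back" for a puff, or None for no action.

    Assumes the sensor reading increases with pressure in the mouthpiece.
    """
    delta = raw - baseline
    if delta <= -PRESSURE_THRESHOLD:
        return "tap"   # sip: pressure drops below the resting baseline
    if delta >= PRESSURE_THRESHOLD:
        return "back"  # puff: pressure rises above the resting baseline
    return None


print(axis_to_step(1023), axis_to_step(0), axis_to_step(520))
print(classify_pressure(420, 500), classify_pressure(580, 500))
```

The dead zone keeps the cursor still when the user's mouth rests on the joystick, and comparing against a resting baseline (rather than a fixed absolute value) lets the same threshold work as ambient pressure drifts.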

In addition to the main control functions (moving the cursor, tapping, and going back), the device can simulate secondary functions such as “tap and drag” and “long tap and drag”.
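The article does not say how these secondary functions are triggered. One plausible approach, sketched below purely as an assumption, is to distinguish them by how long a sip is held: a short sip taps, a medium sip presses and holds a virtual finger so that joystick movement drags, a long sip starts a long-press drag, and the next sip releases. The `sip_action` and `DragState` names, the event labels, and the duration thresholds are all hypothetical.

```python
# Hypothetical scheme: the article does not describe how LipSync
# actually triggers "tap and drag" or "long tap and drag".

SHORT_MAX_MS = 500   # hypothetical: sips shorter than this are a plain tap
LONG_MIN_MS = 1500   # hypothetical: sips at least this long start a long-press drag


def sip_action(duration_ms):
    """Choose an action from how long a sip was held."""
    if duration_ms < SHORT_MAX_MS:
        return "tap"
    if duration_ms < LONG_MIN_MS:
        return "tap_and_drag"
    return "long_tap_and_drag"


class DragState:
    """Track whether a virtual finger is held down while the cursor moves."""

    def __init__(self):
        self.pressed = False
        self.events = []  # actions that would be sent to the phone

    def on_sip(self, duration_ms):
        if self.pressed:
            # Any sip while dragging lifts the virtual finger.
            self.pressed = False
            self.events.append("release")
            return
        action = sip_action(duration_ms)
        if action == "tap":
            self.events.append("tap")
        else:
            # Both drag modes press and hold; a long tap and drag
            # starts with a long press instead of a normal one.
            self.pressed = True
            self.events.append(
                "press_long" if action == "long_tap_and_drag" else "press"
            )

    def on_move(self, dx, dy):
        if dx or dy:
            # Moving with the finger down is a drag; otherwise just a move.
            self.events.append(("drag" if self.pressed else "move", dx, dy))


state = DragState()
state.on_sip(200)    # short sip
state.on_sip(800)    # medium sip: press and hold
state.on_move(5, 0)  # joystick movement while pressed
state.on_sip(100)    # next sip releases
print(state.events)
```

Keying everything off sip duration keeps the interface to just the two existing inputs, at the cost of asking the user to time their sips; a real device would likely make these thresholds adjustable.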

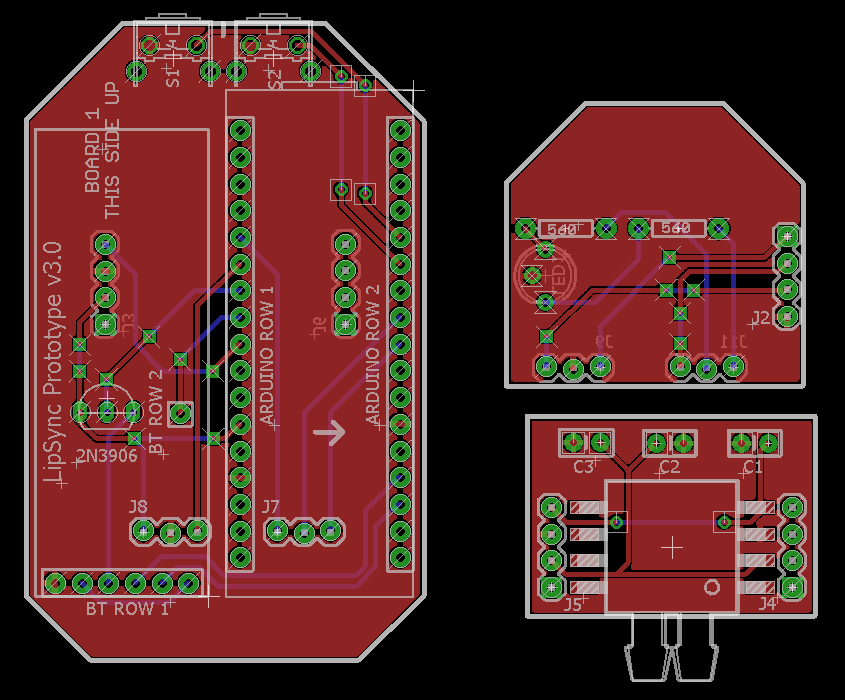

LipSync is an open-source project. Its schematics and PCB files are already available, while the 3D printer files and Arduino code will be made public later.

To read more about LipSync, visit the project page on hackaday.io, where you can follow the project and join the development team.