M5Stack Module LLM: AX630C SoC with 3.2 TOPS NPU for Offline AI Applications

M5Stack’s Module LLM is an offline large language model (LLM) inference module powered by the Axera AX630C SoC. It is designed for terminal devices in smart homes, voice assistants, and industrial control systems. Because inference runs locally instead of in the cloud, the module improves privacy and keeps working without a network connection. The AX630C integrates a 3.2 TOPS (INT8) NPU with native support for Transformer models, so it can handle complex AI workloads efficiently. The module also includes 4GB of LPDDR4 memory (1GB for the system and applications, 3GB reserved for hardware acceleration) and 32GB of eMMC storage.

Built on TSMC’s 12nm process, the AX630C SoC is energy efficient, with a power consumption of just 1.5W at full load, which makes it well suited to long-term, always-on use. The Module LLM supports the StackFlow framework as well as Arduino and UiFlow libraries, so smart features can be added with minimal coding. It targets scenarios that require efficient local AI inference, low power consumption, and strong privacy.
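To put the power figures in perspective, the short Python sketch below converts them into supply current and daily energy. It assumes the idle (0.5W) and full-load (1.5W) numbers from the specifications are measured at the 5V input and that the draw is constant; the helper names are hypothetical and the host board's own consumption is not included.

```python
# Back-of-the-envelope supply-current and energy estimates for the Module LLM.
# Assumption: the spec-sheet figures (0.5W idle, 1.5W full load) are measured
# at the 5V input and the draw stays constant over the period considered.

SUPPLY_VOLTAGE_V = 5.0
POWER_IDLE_W = 0.5
POWER_FULL_LOAD_W = 1.5


def supply_current_ma(power_w: float, voltage_v: float = SUPPLY_VOLTAGE_V) -> float:
    """Current drawn from the supply, I = P / V, returned in milliamps."""
    return power_w * 1000.0 / voltage_v


def daily_energy_wh(power_w: float, hours: float = 24.0) -> float:
    """Energy used over `hours` at a constant power draw, in watt-hours."""
    return power_w * hours


if __name__ == "__main__":
    for label, p in (("idle", POWER_IDLE_W), ("full load", POWER_FULL_LOAD_W)):
        print(f"{label}: {supply_current_ma(p):.0f} mA, {daily_energy_wh(p):.1f} Wh/day")
```

Under these assumptions the module draws about 100 mA idle and 300 mA at full load, or roughly 12 to 36 Wh per day if left running continuously.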

M5Stack Module LLM specifications:

- SoC: Axera Tech AX630C
  - Dual-core Arm Cortex-A53 @ 1.2 GHz
  - 32KB I-Cache, 32KB D-Cache, 256KB L2 Cache
  - Neural Processing Unit (NPU): 12.8 TOPS @ INT4 (max), 3.2 TOPS @ INT8
- Memory and Storage:
  - 4GB LPDDR4 RAM (1GB for system + 3GB for hardware acceleration)
  - 32GB eMMC 5.1 flash
  - microSD card slot
- Imaging and Video:
  - ISP: 4K @ 30fps
  - Video Encoding: 4K
  - Video Decoding: 1080p @ 60fps, H.264 only
- Networking: Supports single-channel RGMII / RMII PHY interface
- Audio:
  - Audio Driver: AW8737
  - Speaker: 8Ω @ 1W, 2014 cavity speaker
  - Built-in microphone (MSM421A)
  - AI Audio Features: Text-to-speech (TTS), Automatic Speech Recognition (ASR), Keyword Spotting (KWS)
- USB and Serial Connectivity:
  - 1x USB OTG port
  - 1x UART (default baud rate: 115200, 8N1)
- Expansion: 8-pin FPC interface for Ethernet debugging kit
- Miscellaneous:
  - 3x RGB LED for status indication
  - 1x Boot button
- Power Supply and Consumption:
  - 5V via USB-C port
  - Power Consumption: Idle: 5V @ 0.5W; Full Load: 5V @ 1.5W
- Dimensions: 54 x 54 x 13 mm
- Operating Temperature: 0 to 40°C
- Product Weight: 17.4g
- Packaging Weight: 32.0g
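The default UART settings (115200 baud, 8N1) set an upper bound on how quickly text can travel between a host and the module. The Python sketch below works through that arithmetic: with 8N1 framing each byte costs 10 bits on the wire (1 start, 8 data, no parity, 1 stop). It assumes back-to-back framing with no inter-byte gaps and ignores any overhead added by the StackFlow message format; the function names are hypothetical helpers, not part of any M5Stack library.

```python
# Estimate the usable payload rate of the default UART link (115200 baud, 8N1)
# and the best-case time to move a text reply across it.
# Assumption: bytes are sent back-to-back with no gaps and no protocol overhead.

def uart_bytes_per_second(baud: int = 115200, data_bits: int = 8,
                          parity_bits: int = 0, stop_bits: int = 1) -> float:
    """Maximum payload bytes/s: each byte costs a start bit + data + parity + stop bits."""
    bits_per_frame = 1 + data_bits + parity_bits + stop_bits
    return baud / bits_per_frame


def transfer_time_ms(num_bytes: int, baud: int = 115200) -> float:
    """Best-case time in milliseconds to send `num_bytes` with 8N1 framing."""
    return num_bytes / uart_bytes_per_second(baud) * 1000.0


if __name__ == "__main__":
    print(f"8N1 @ 115200 baud: {uart_bytes_per_second():.0f} bytes/s")
    reply = "word " * 200  # hypothetical 1000-byte ASCII reply
    print(f"1000-byte reply: {transfer_time_ms(len(reply.encode())):.1f} ms")
```

At about 11.5 KB/s, a 1,000-byte text reply needs roughly 87 ms on the wire before any protocol overhead is added.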

The Module LLM offers plug-and-play compatibility with M5Stack hosts, so it can be added to existing smart devices without complex configuration. It makes advanced AI features straightforward to implement in applications such as offline voice assistants, text-to-speech conversion, smart home control, and interactive robots.

The Module LLM comes pre-installed with the Qwen2.5-0.5B language model, along with wake-word detection, text-to-speech, and speech recognition, which can be used standalone or chained together in a pipeline. Future support is planned for the Qwen2.5-1.5B and Llama3.2-1B language models and the InternVL2-1B vision-language model. The module also supports computer vision models such as CLIP and YOLO-World, with upcoming updates expected to add Depth Anything, Segment Anything, and other models.

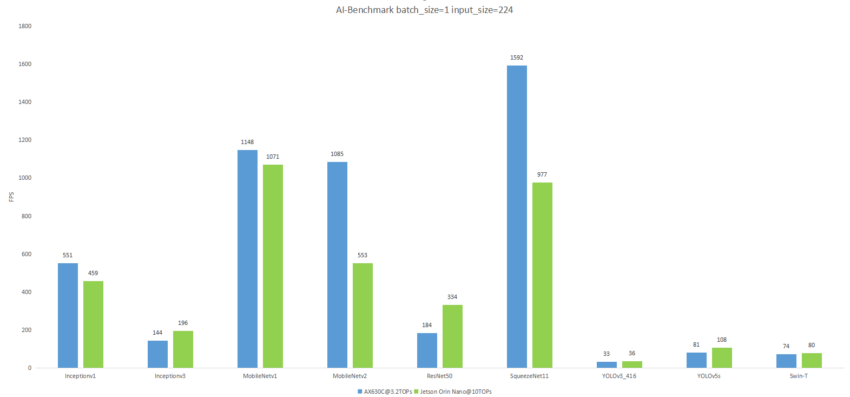

The Module LLM is integrated with the StackFlow framework and is compatible with Arduino and UiFlow libraries, so developers can add AI features to their projects easily. Tutorials and documentation covering setup and integration are available on M5Stack’s official website. The module also performs competitively against the Jetson Orin Nano running at 10W, as highlighted in the comparison chart below.

The M5Stack Module LLM is priced at $49.90 on the official store and is also sold through the M5Stack stores on Amazon and AliExpress, although it was out of stock at the time of writing. For more details, visit the product page.