Nvidia is one of those companies that never ceases to amaze me. I have to say that they have practically monopolized the GPU ecosystem, and now Nvidia is gunning for (if it hasn’t already claimed) a new crown: AI on the Edge.

AI in the cloud has been the buzz for some time now, enabling numerous applications to gain artificial intelligence capabilities without much effort. Of course, this is a good idea, and multiple players are vying for this space. I believe cloud computing is great, but it alone isn’t enough. Several concerns come into play here: centralization, privacy (who really controls the data), security, and, most crucially, physics (there is a limit to how much data we can send and receive). AI on the Edge brings a different dimension to this: you keep control of much more and, most importantly, you can build to your imagination.

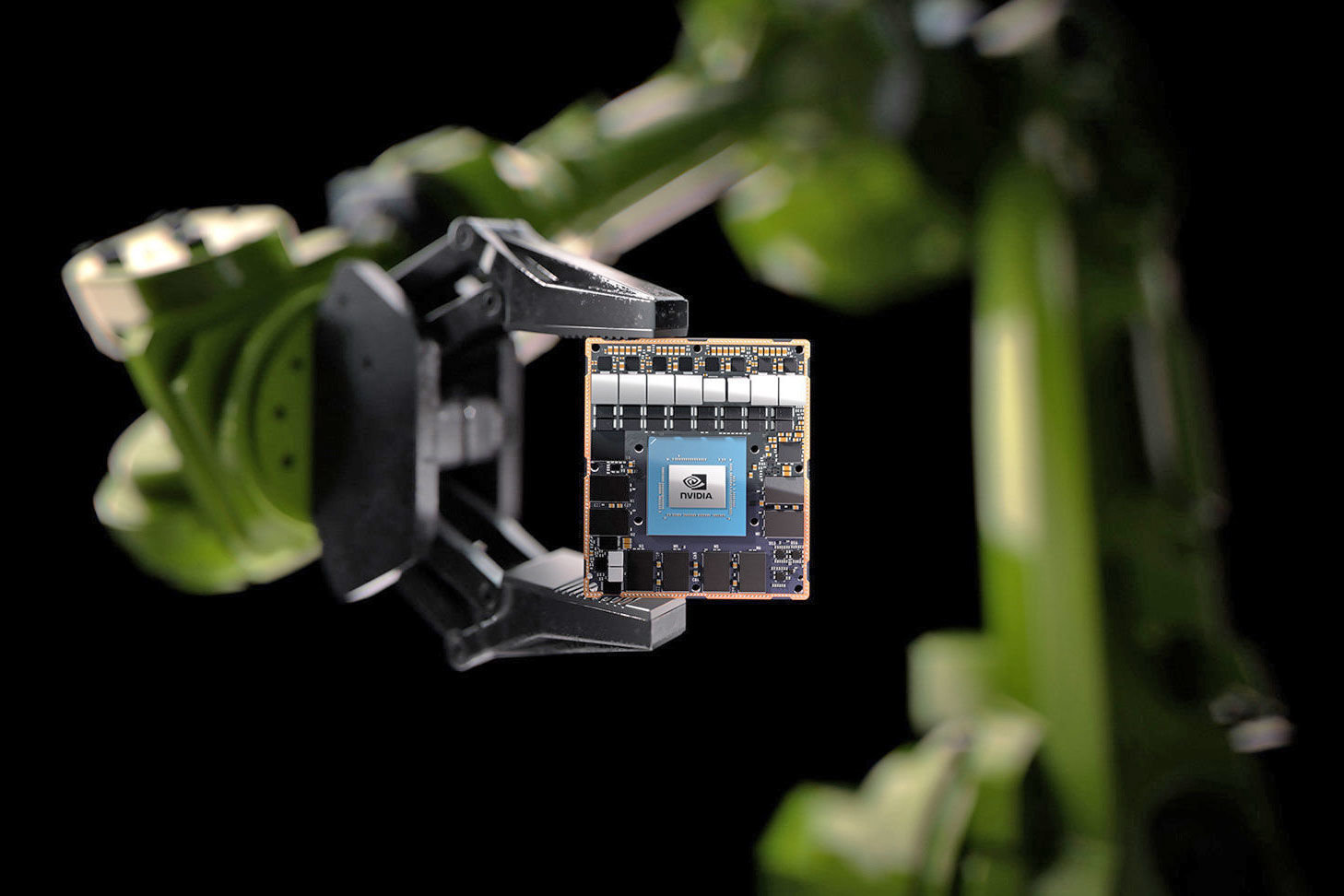

With AI in the cloud, we put critical assets and infrastructure at risk of attack and might not even get the performance we were aiming for, especially in the case of autonomous machines. Nvidia has been pushing edge infrastructure to enable AI on the Edge with its wide array of AI-enabled hardware, and the unveiling of the Jetson AGX Xavier module looks like a total game changer. It promises to give robots and other intelligent machines all the processing juice their AI brains will need.

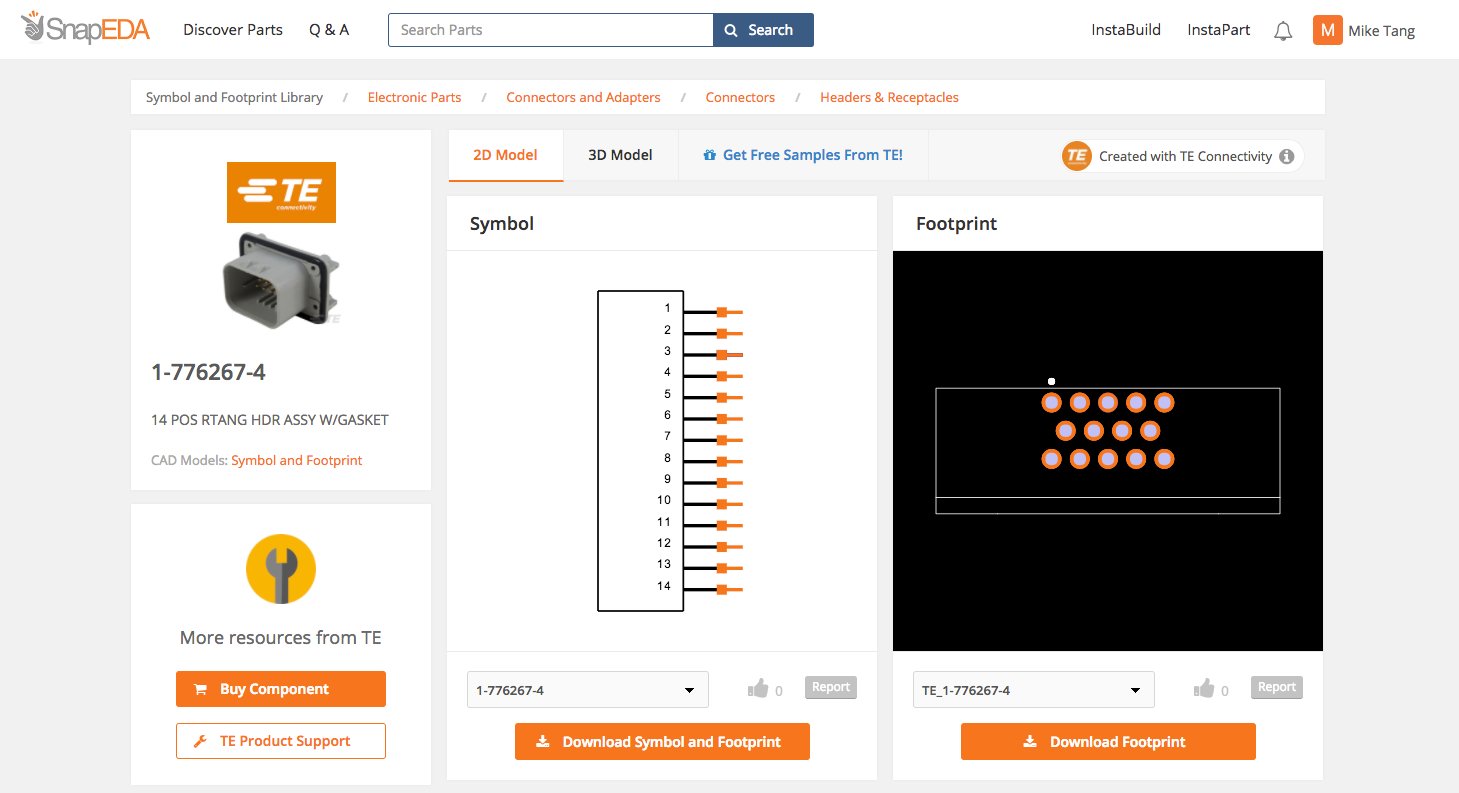

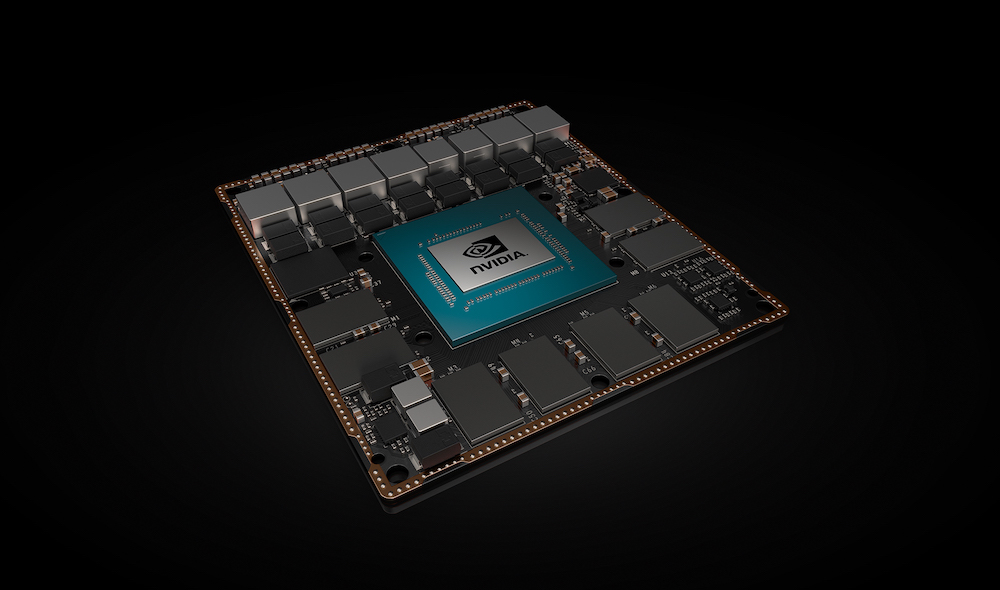

Nvidia announced the Xavier platform earlier this year and has now added the AGX to the Jetson Xavier name. The AGX Xavier module is built around the Xavier system-on-chip, which relies on six types of processors to get its work done: a 512-core Nvidia Volta Tensor Core GPU, an eight-core Carmel Arm64 CPU, two NVDLA deep-learning accelerators, and dedicated image, vision, and video processors. Here comes the impressive part: the AGX Xavier module delivers up to 32 TOPS (32 trillion operations per second) of accelerated computing capability while consuming under 30 watts. That’s more than 20X the performance and 10X the energy efficiency of the Jetson TX2. Users can configure operating modes at 10W, 15W, and 30W as needed.
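To put those headline numbers in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the figures quoted above and assumes the 32 TOPS peak is reached at the 30W ceiling. The "implied TX2" values are simply what the 20X and 10X ratios work out to, not official Nvidia specifications, and the function and variable names are made up for illustration.

```python
# Figures quoted in the article for the Jetson AGX Xavier module.
PEAK_TOPS = 32.0     # peak throughput, trillion operations per second
MAX_POWER_W = 30.0   # upper power budget in watts (assumed to match the peak)


def tops_per_watt(tops: float, watts: float) -> float:
    """Return throughput per watt; watts must be positive."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return tops / watts


xavier_efficiency = tops_per_watt(PEAK_TOPS, MAX_POWER_W)

# "More than 20X the performance and 10X the energy efficiency" means the
# TX2 sits at or below these values (derived from the ratios, not a spec).
implied_tx2_tops_max = PEAK_TOPS / 20
implied_tx2_efficiency_max = xavier_efficiency / 10

print(f"AGX Xavier: about {xavier_efficiency:.2f} TOPS per watt")
print(f"Implied TX2 ceiling: {implied_tx2_tops_max:.1f} TOPS, "
      f"{implied_tx2_efficiency_max:.3f} TOPS per watt")
```

In other words, the module lands at a little over one TOPS per watt at its highest power mode. The article does not say how throughput scales in the 10W and 15W modes, so those are left out of the calculation.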

The module is available with a BSP featuring Nvidia’s Linux4Tegra stack, and as previously announced, Nvidia also offers an AI-focused Isaac SDK. The AGX module is not designed for the everyday user, though: it costs $1,099 each in batches of 1,000 units. The AGX Xavier module can handle visual odometry, sensor fusion, localization and mapping, obstacle detection, and path planning algorithms, making it ideal for demanding robots and other autonomous machines that need a lot of performance at relatively low power.

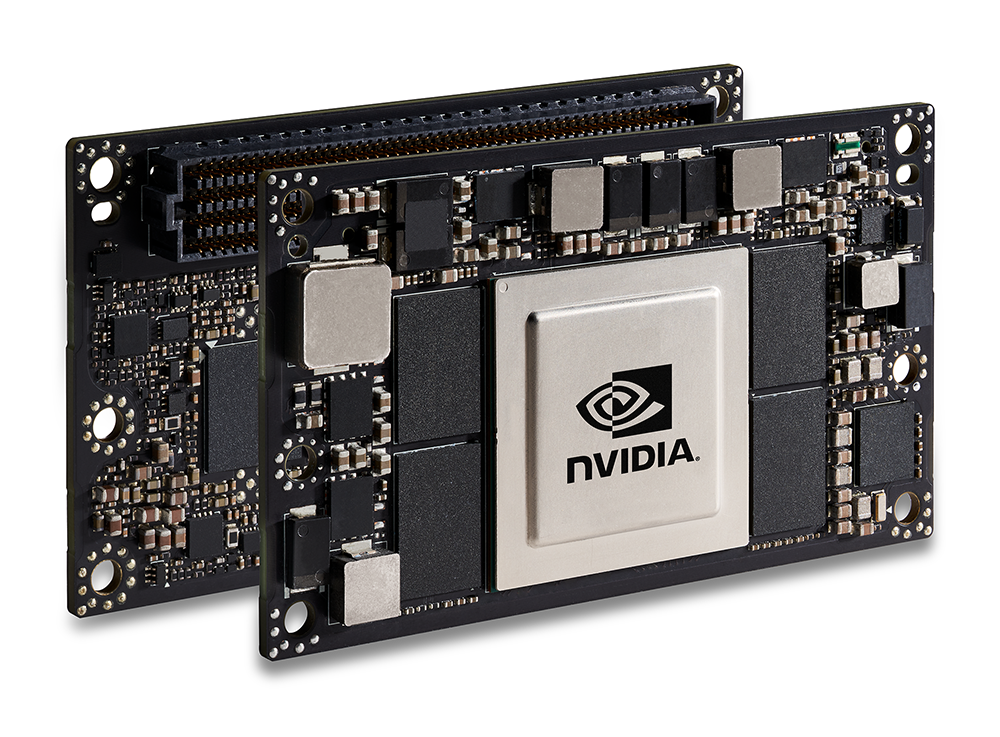

Joining the Jetson family is the newly introduced Jetson TX2 4GB, which is expected to be cheaper than the 8GB Jetson TX2. Like the earlier TX2 modules, the 4GB version features two high-end “Denver 2” cores and four Cortex-A57 cores. You also get the 256-core Pascal GPU with CUDA libraries for running AI and machine learning algorithms. It is basically the same Jetson TX2 with less memory.

Nvidia’s Jetson TX2 4GB is expected to be available in June 2019, and pricing is rumored to be around $299 in volumes of 1,000 units. The Jetson AGX Xavier is available now starting at $1,099 per module for purchases of 1,000 or more. More information may be found in Nvidia’s announcement and its Jetson TX2 4GB product page.
