Researchers from the University of Chicago developed a foldable haptic actuator for mixed reality applications

An actuator is the part of a device that converts energy into motion and so operates a mechanism. A simple example is a solenoid valve, where the actuator opens and closes the valve. The example shows that an actuator (in other words, a part that performs a movement in a specific direction) needs two things: a control signal and a source of energy. The control signal is relatively low-energy, typically a voltage or current, and it can come from software, a human operator, or any other input. Combining actuators with mixed reality applications opens up new forms of interaction. Mixed reality refers to combining the real and virtual worlds to produce new environments and visualizations.

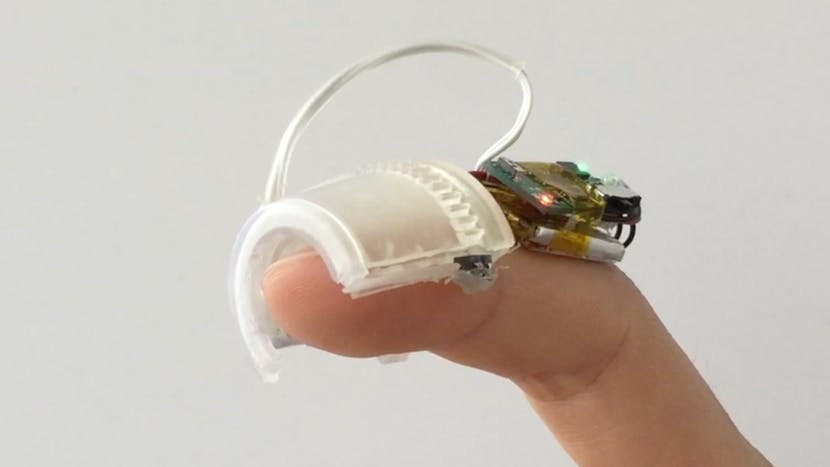

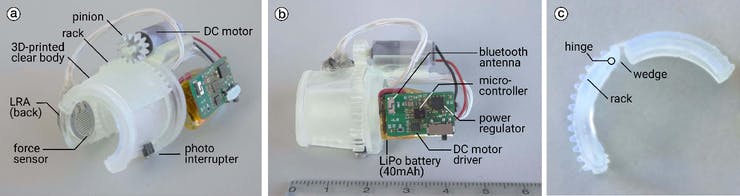

With mixed reality, physical and digital objects can co-exist and interact in real time. A team of researchers from the University of Chicago recently published a paper on a foldable haptic actuator for rendering touch in mixed reality (MR). The device, called Touch&Fold, is designed to let the user feel a virtual object: when the user touches one, the device presses against the user’s fingerpad and can deliver low-frequency vibrations at the point of contact. It is designed to fit on a user’s fingernail. When the device is not in use (when the user is touching real-world objects), it folds back on top of the nail.

Touch&Fold features a linear resonant actuator that allows rendering not only touch contacts (i.e., pressure) but also textures (i.e., vibrations). The device is wireless and self-contained. It measures only 24×24×41 mm and weighs 9.5 g. The actuation takes only 92 milliseconds.

“Many haptic devices on the market enable users to interact with objects in the virtual world, such as controllers and gloves, but none provides the ability to interact with objects in both worlds and feel them at the same time.”

Touch&Fold works with the Microsoft HoloLens 2 headset, which displays the mixed reality environment and tracks the user’s hand in real time using depth cameras. A DC motor drives the folding mechanism. The control loop uses an FSR 400 force sensor and an SG-105F photo-interrupter. The force sensor’s reading also serves as a feedback signal for fine-tuning the amount of pressure applied to the fingerpad.
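To make the idea of force feedback concrete, here is a minimal sketch of a proportional control loop that nudges an arm until a measured force reaches a target. It is not the researchers' firmware: the `SimulatedFingerpad` class, the `press_to_target` function, the gain, the stiffness, and the target force are all hypothetical values chosen for illustration, and the real motor and sensor are replaced by a toy linear model.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class SimulatedFingerpad:
    """Toy stand-in for the motor, arm and force sensor (not the real hardware)."""

    stiffness: float = 0.5  # assumed: newtons of force per unit of arm travel
    position: float = 0.0   # arm travel past first contact (arbitrary units)

    def read_force(self) -> float:
        # Force is zero until contact, then grows linearly with travel.
        return max(0.0, self.stiffness * self.position)

    def drive(self, command: float) -> None:
        # Treat the motor command as a small change in arm position per step.
        self.position += command


def press_to_target(
    plant: SimulatedFingerpad,
    target_force: float,
    gain: float = 0.8,        # assumed proportional gain
    tolerance: float = 0.01,  # acceptable force error in newtons
    max_steps: int = 200,
) -> Tuple[int, float]:
    """Proportional loop: move the arm until the measured force is near the target.

    Returns the number of steps taken and the final measured force.
    """
    for step in range(max_steps):
        error = target_force - plant.read_force()
        if abs(error) <= tolerance:
            return step, plant.read_force()
        plant.drive(gain * error)
    return max_steps, plant.read_force()


if __name__ == "__main__":
    steps, force = press_to_target(SimulatedFingerpad(), target_force=1.0)
    print(f"settled after {steps} steps at {force:.3f} N")
```

In this toy model each step shrinks the force error by a constant factor, so the loop settles quickly. A real device would also have to deal with sensor noise, motor dynamics, and the moment of first contact, none of which is modeled here.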

In their study, the researchers demonstrate how the haptic device renders contact with MR surfaces and buttons, as well as low- and high-frequency textures. In the first user study, participants perceived Touch&Fold to be more realistic than a previous haptic device. In the second user study, the folding mechanism let participants use the same finger to manipulate handheld tools and small objects, and even to feel textures and liquids, while still receiving haptic feedback when touching MR interfaces.

Overall, the device lets the user touch and feel objects in the virtual world, and it quickly tucks away when the user interacts with real-world objects.
