Tag: LLMs

M5Stack Module LLM: AX630C SoC with 3.2 TOPS NPU for Offline AI Applications

M5Stack's Module LLM is an offline Large Language Model (LLM) inference module powered by the AX630C SoC, designed for terminal devices in smart homes, voice assistants, and industrial control systems. It enables local AI processing without relying on cloud services, enhancing privacy...

Useful Sensors releases AI in a Box: run LLMs locally with no internet connection

The founders of TensorFlow, a free and open-source software library for machine learning and artificial intelligence, have launched a company called Useful Sensors. The company has released AI in a Box, a crowdfunding project designed to provide users with their own private and offline AI...

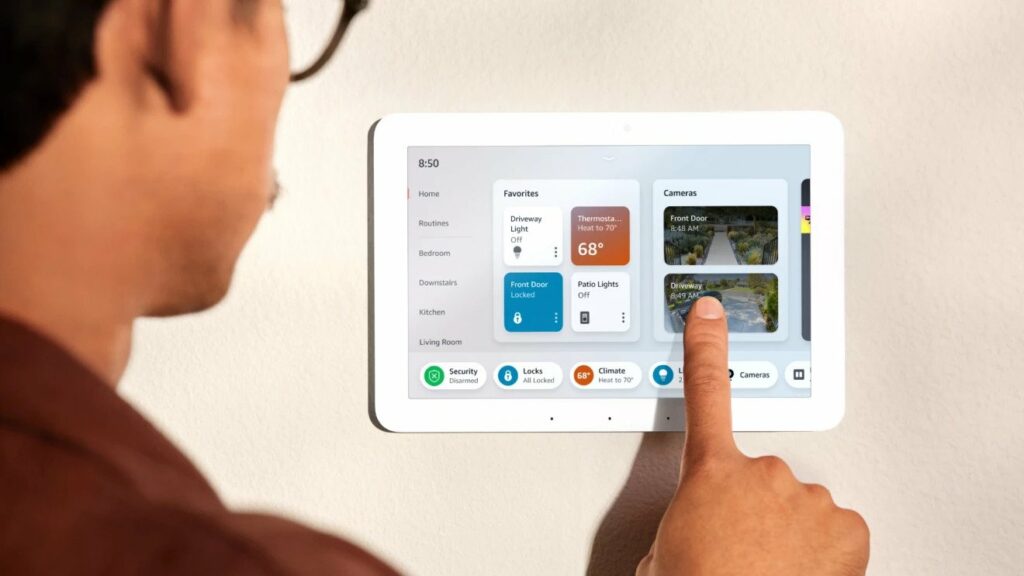

Amazon improves the user experience of controlling smart homes with Alexa

Amazon highlights the challenges users still face when interacting with Alexa. Although Alexa has improved the way people control their smart homes, the company acknowledges that problems remain: users often have to remember specific phrases or device names, make multiple requests, or even...